Big Data Engineer / Big Data Analyst


Understanding the Role of a Big Data Engineer

Big data engineering is a critical field within the realm of data science, focusing on the development and maintenance of the infrastructure required to store, process, and analyze vast amounts of data. A big data engineer plays a pivotal role in designing, building, and managing the data pipelines and systems that enable organizations to extract valuable insights from their data assets. Let's delve deeper into the key responsibilities, skills, and best practices associated with being a big data engineer.

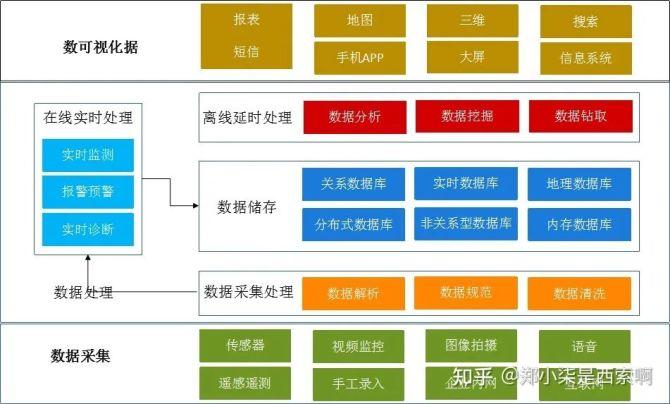

Key Responsibilities

1. Data Infrastructure Design and Development: Big data engineers are responsible for designing and implementing scalable, reliable, and efficient data storage and processing systems. This involves selecting appropriate technologies, such as Hadoop for distributed storage and batch processing, Apache Spark for large-scale computation, or Apache Kafka for real-time data streaming, and designing data architectures that can handle diverse data sources and formats.

2. Data Pipeline Development: Building robust data pipelines is essential for ingesting, transforming, and delivering data to downstream applications and analytics platforms. Big data engineers design and develop ETL (Extract, Transform, Load) processes to ensure the timely and accurate flow of data throughout the system.

3. Performance Optimization: Optimizing the performance of data processing systems is crucial for ensuring timely insights and efficient resource utilization. Big data engineers monitor system performance, identify bottlenecks, and implement optimizations to enhance throughput and reduce latency.

4. Data Security and Compliance: Safeguarding sensitive data and ensuring compliance with regulatory requirements are top priorities for big data engineers. They implement security measures such as encryption, access controls, and auditing to protect data assets and maintain regulatory compliance.
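The ETL process from item 2 (Data Pipeline Development), together with item 1's need to handle diverse formats, can be sketched in a few lines of standard-library Python. This is a minimal illustration under stated assumptions, not a production pipeline: the sample CSV and JSON payloads, the `orders` table, and the functions `extract`, `transform`, `load`, and `run_pipeline` are all hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw inputs: the same kind of record arriving in two formats.
CSV_SOURCE = "order_id,amount,country\n1,19.99,us\n2,5.00,DE\n"
JSON_SOURCE = '[{"id": 3, "total": "42.50", "country": "fr"}]'


def extract():
    """Extract: read raw records from each source as dictionaries."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    json_rows = json.loads(JSON_SOURCE)
    return csv_rows, json_rows


def transform(csv_rows, json_rows):
    """Transform: map both formats onto one schema and normalize values."""
    unified = []
    for row in csv_rows:
        unified.append((int(row["order_id"]), float(row["amount"]), row["country"].upper()))
    for row in json_rows:
        # The JSON source uses different field names for the same concepts.
        unified.append((int(row["id"]), float(row["total"]), row["country"].upper()))
    return unified


def load(records, conn):
    """Load: write the unified records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline():
    """Run extract -> transform -> load against an in-memory database."""
    conn = sqlite3.connect(":memory:")
    load(transform(*extract()), conn)
    return conn


if __name__ == "__main__":
    conn = run_pipeline()
    for row in conn.execute("SELECT * FROM orders ORDER BY order_id"):
        print(row)
```

Keeping the three stages as separate functions makes each one testable on its own and lets a real deployment swap the in-memory SQLite target for a warehouse or data lake without touching the extraction logic.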
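Identifying bottlenecks, as item 3 (Performance Optimization) describes, starts with measuring where time goes. The sketch below assumes a pipeline modeled as a sequence of plain Python functions; the stage names and the `profile_stages` helper are hypothetical. It times each stage with `time.perf_counter` and reports the slowest stage along with overall throughput. Real systems would usually rely on their framework's own metrics (for example, the Spark UI), but the principle is the same.

```python
import time


def profile_stages(stages, records):
    """Run records through each (name, function) stage in order, timing each.

    Returns the final output, a {stage_name: seconds} mapping, and the name
    of the slowest stage, which is the first candidate for optimization.
    """
    timings = {}
    data = records
    for name, func in stages:
        start = time.perf_counter()
        data = func(data)
        timings[name] = time.perf_counter() - start
    slowest = max(timings, key=timings.get)
    return data, timings, slowest


# Hypothetical stages; parsing sleeps briefly to simulate a bottleneck.
def parse(rows):
    time.sleep(0.05)
    return [int(r) for r in rows]


def enrich(values):
    return [v * 2 for v in values]


def aggregate(values):
    return sum(values)


if __name__ == "__main__":
    raw = [str(i) for i in range(10_000)]
    result, timings, slowest = profile_stages(
        [("parse", parse), ("enrich", enrich), ("aggregate", aggregate)], raw
    )
    total = sum(timings.values())
    for name, seconds in timings.items():
        print(f"{name:<10} {seconds * 1000:8.2f} ms")
    print(f"slowest stage: {slowest}; throughput: {len(raw) / total:,.0f} records/s")
```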
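Item 4 (Data Security and Compliance) names access controls and auditing among the security measures. As a minimal sketch of how the two fit together, the code below implements a role-based permission check in which every access attempt, allowed or denied, is appended to an audit log. The roles, permission strings, and the `AccessController` class are hypothetical; in practice this job usually falls to tools such as Apache Ranger or a cloud provider's IAM service, and encryption would be handled separately.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical mapping from roles to "action:dataset" permissions.
ROLE_PERMISSIONS = {
    "analyst": {"read:sales"},
    "engineer": {"read:sales", "write:sales"},
}


@dataclass
class AccessController:
    user_roles: dict  # user name -> set of role names
    audit_log: list = field(default_factory=list)

    def check(self, user, action, dataset):
        """Return True if any of the user's roles grants action on dataset.

        Every decision, allowed or denied, is recorded for later review.
        """
        permission = f"{action}:{dataset}"
        roles = self.user_roles.get(user, set())
        allowed = any(permission in ROLE_PERMISSIONS.get(r, set()) for r in roles)
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "permission": permission,
            "allowed": allowed,
        })
        return allowed


if __name__ == "__main__":
    acl = AccessController({"alice": {"analyst"}, "bob": {"engineer"}})
    print(acl.check("alice", "write", "sales"))  # False: analysts are read-only
    print(acl.check("bob", "write", "sales"))    # True: engineers may write
    for entry in acl.audit_log:
        print(entry)
```

Logging denials as well as grants matters for compliance: repeated denied attempts are often the first sign of misuse.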

Essential Skills

1. Proficiency in Big Data Technologies: Big data engineers should have a deep understanding of big data technologies and frameworks such as Hadoop, Spark, Kafka, and HBase. They should also be proficient in programming languages like Java, Python, or Scala.

2. Database Management: Strong knowledge of database systems, both relational and non-relational, is essential for designing and optimizing data storage solutions. Experience with SQL and NoSQL databases is highly desirable.

3. ETL and Data Integration: Big data engineers should be skilled in designing and implementing ETL processes that extract, transform, and load data from disparate sources into a unified data warehouse or data lake. Familiarity with tools like Apache NiFi or Talend is beneficial.

4. Cloud Computing: With the increasing adoption of cloud platforms for big data processing, knowledge of cloud services such as AWS, Azure, or Google Cloud Platform is invaluable. Big data engineers should be proficient in deploying and managing data pipelines on cloud infrastructure.

Best Practices

1. Design for Scalability: When designing data systems, prioritize scalability to accommodate growing data volumes and user demands. Use distributed computing techniques and scalable architectures to ensure that the system can handle increased loads.

2. Automate Processes: Automate routine tasks such as data ingestion, transformation, and deployment to streamline operations and reduce manual effort. Tools like Apache Airflow can orchestrate and schedule workflows, while Kubernetes can automate the deployment, scaling, and management of containerized services.

3. Monitor Performance Continuously: Implement robust monitoring and logging mechanisms to track system performance and detect anomalies proactively. Tools such as Prometheus (metrics collection and alerting), Grafana (dashboards and visualization), and the ELK Stack (Elasticsearch, Logstash, and Kibana, for log aggregation and search) provide insight into system behavior and performance metrics.

4. Stay Updated with Emerging Technologies: The field of big data is constantly evolving, with new technologies and frameworks emerging regularly. Stay abreast of industry trends and innovations, and continuously update your skills to remain competitive in the field.
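One common distributed-computing technique behind item 1 (Design for Scalability) is hash partitioning: each record is assigned to a partition by hashing its key, so load spreads across workers and all records with the same key land on the same partition. This is similar in spirit to how Kafka and Spark assign keyed records to partitions, though the sketch below is a simplified illustration; the `partition_for` and `partition_records` helpers, the partition count, and the sample keys are hypothetical. It uses a stable CRC32 hash from `zlib` rather than Python's built-in `hash()`, whose output for strings is randomized between processes.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition index using a hash that is stable across runs."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_records(records, num_partitions):
    """Group (key, value) records into partitions keyed by partition index."""
    partitions = defaultdict(list)
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return dict(partitions)


if __name__ == "__main__":
    # Hypothetical event stream: 20 events spread over 5 users.
    events = [(f"user-{i % 5}", i) for i in range(20)]
    for pid, rows in sorted(partition_records(events, num_partitions=3).items()):
        print(pid, rows)
```

One design caveat: with plain modulo hashing, changing `num_partitions` remaps most keys, so scaling out forces a large reshuffle. Schemes such as consistent hashing reduce how many keys move when partitions are added.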
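Item 2 (Automate Processes) mentions Apache Airflow, which models a workflow as a directed acyclic graph (DAG) of tasks that run only after their upstream dependencies finish. To illustrate that core idea without Airflow itself, the sketch below runs hypothetical tasks in dependency order using the standard library's `graphlib.TopologicalSorter` (Python 3.9+). It is not Airflow's API: the task names and the `make_task` and `run_workflow` helpers are hypothetical, and scheduling, retries, and distributed execution are left out.

```python
from graphlib import TopologicalSorter


def make_task(name, log):
    """Create a hypothetical task that simply records its name when run."""
    def task():
        log.append(name)
    return task


def run_workflow(dependencies, tasks):
    """Run each task only after all of its upstream tasks have finished.

    `dependencies` maps a task name to the set of task names it depends on.
    TopologicalSorter raises graphlib.CycleError if the graph has a cycle.
    """
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()


if __name__ == "__main__":
    log = []
    deps = {
        "transform": {"ingest"},
        "load": {"transform"},
        "report": {"load"},
        "quality_check": {"transform"},
    }
    names = ["ingest", "transform", "load", "report", "quality_check"]
    tasks = {n: make_task(n, log) for n in names}
    run_workflow(deps, tasks)
    print(log)
```

Declaring dependencies rather than a fixed sequence lets an orchestrator run independent tasks (here, `load` and `quality_check`) in parallel and detect circular dependencies before anything runs.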
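Item 3 (Monitor Performance Continuously) calls for detecting anomalies proactively. Monitoring stacks such as Prometheus and Grafana provide their own alerting rules, but the underlying idea can be sketched with a rolling z-score: flag any metric sample that deviates from the mean of the preceding window by more than a threshold number of standard deviations. The `detect_anomalies` function, window size, threshold, and latency values below are hypothetical and would need tuning against real data.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(samples, window=5, threshold=3.0):
    """Return indices of samples more than `threshold` standard deviations
    away from the mean of the previous `window` samples."""
    history = deque(maxlen=window)  # oldest sample drops out automatically
    anomalies = []
    for i, value in enumerate(samples):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # Skip flat windows (sigma == 0) to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(value)
    return anomalies


if __name__ == "__main__":
    # Hypothetical request latencies in milliseconds, with one spike.
    latencies_ms = [120, 118, 125, 122, 119, 121, 480, 123, 120, 117]
    print(detect_anomalies(latencies_ms))  # → [6]
```

Because the spike enters the window, the next few samples are judged against an inflated standard deviation, which can mask a second anomaly. Production detectors often exclude flagged points from the baseline or use robust statistics such as the median.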

In conclusion, big data engineering is a dynamic and challenging field that requires a blend of technical expertise, problem-solving skills, and a deep understanding of data principles. By mastering key technologies, adopting best practices, and staying updated with industry trends, big data engineers can effectively harness the power of data to drive insights and innovation in organizations.